Today, most autonomous vehicles rely on sensor fusion, in which data from millimeter-wave radar, lidar, and cameras is analyzed and combined according to defined criteria to build a picture of the environment. As the leading companies in the self-driving car industry have shown, multi-sensor fusion improves the performance of self-driving systems and makes vehicle travel safer.

But not all sensor fusion approaches are equally effective. While many self-driving car manufacturers rely on object-level (also called "target-level") sensor fusion, only centralized pre-sensor fusion can provide self-driving systems with the information they need for optimal driving decisions. Below we explain the difference between object-level fusion and centralized pre-sensor fusion, and why the centralized approach is the better choice.

Centralized pre-sensor fusion preserves raw sensor data for more precise decisions

Autonomous driving systems typically rely on a specialized suite of sensors to collect underlying raw data about their environment. Each type of sensor has advantages and disadvantages, as shown in the diagram:

Fusing data from millimeter-wave radar, lidar, and cameras maximizes the quality and quantity of the data collected, producing a complete picture of the environment.

Autonomous vehicle manufacturers generally accept that multi-sensor fusion outperforms processing each sensor individually, but the fusion usually happens in an object-level post-processing stage. In this mode, the collection, processing, and classification of object data all occur at the sensor level, and fusion happens only afterward. Because each sensor's information is pre-filtered separately before the data is processed as a whole, much of the background information needed for autonomous driving decisions is discarded. This makes it difficult for object-level fusion to meet the needs of future autonomous driving algorithms.

Centralized pre-sensor fusion avoids this risk. Millimeter-wave radar, lidar, and camera sensors send their underlying raw data to the vehicle's central domain controller for processing. This approach maximizes the amount of information available to the autonomous driving system, so the algorithms can use all of the valuable information and make better decisions than object-level fusion allows.
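The difference between the two approaches can be illustrated with a toy example. The sketch below is not any vendor's algorithm: the per-cell confidence scores, the 0.5 threshold, and the noisy-OR rule used to combine evidence (which assumes the two sensors are independent) are all assumptions chosen for illustration. It shows how an object that is weakly visible to both sensors is dropped when each sensor filters on its own, but survives when the raw scores are combined first.

```python
# Illustrative sketch only: names, scores, and threshold are hypothetical.

DETECTION_THRESHOLD = 0.5  # assumed confidence cut-off


def object_level_fusion(radar_scores, camera_scores, threshold=DETECTION_THRESHOLD):
    """Each sensor filters its own data first; only surviving detections are merged."""
    radar_hits = {cell for cell, score in enumerate(radar_scores) if score >= threshold}
    camera_hits = {cell for cell, score in enumerate(camera_scores) if score >= threshold}
    return sorted(radar_hits | camera_hits)


def centralized_pre_fusion(radar_scores, camera_scores, threshold=DETECTION_THRESHOLD):
    """Raw scores are combined centrally (noisy-OR), then thresholded once."""
    fused = [1 - (1 - r) * (1 - c) for r, c in zip(radar_scores, camera_scores)]
    return [cell for cell, score in enumerate(fused) if score >= threshold]


# Four grid cells; cell 1 holds an object that both sensors see only weakly.
radar = [0.10, 0.40, 0.90, 0.05]
camera = [0.05, 0.40, 0.20, 0.10]

late = object_level_fusion(radar, camera)      # cell 1 is filtered out by both sensors
early = centralized_pre_fusion(radar, camera)  # cell 1 survives: 1 - 0.6 * 0.6 = 0.64

print("object-level:", late)
print("centralized: ", early)
```

Real systems fuse far richer data than a list of scores, but the effect is the same in kind: evidence discarded at the sensor cannot be recovered downstream.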

AI-enhanced millimeter-wave radar dramatically improves autonomous driving system performance through centralized processing

Today, autonomous driving systems already centrally process camera data. But when it comes to mmWave radar data, centralized processing is still impractical. High-performance mmWave radars typically require hundreds of antenna channels, which greatly increases the amount of data generated. Therefore, local processing becomes a more cost-effective option.

However, Ambarella's AI-enhanced mmWave radar perception algorithms can improve radar angular resolution and performance without requiring additional physical antennas. Raw radar data from fewer channels can be delivered to a central processor at lower cost over interfaces such as standard automotive Ethernet. When autonomous driving systems fuse raw AI-enhanced radar data with raw camera data, they can exploit these two complementary sensing modalities to build a complete picture of the environment, one that is more comprehensive than the information from any single sensor.
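A rough calculation shows why channel count matters for centralized processing. The sketch below is a back-of-the-envelope estimate, not a specification of any real radar: the channel counts, chirp parameters, frame rate, sample width, and the 1 Gbit/s link budget are all assumed values picked for illustration.

```python
# Illustrative estimate only: every parameter value below is an assumption.

LINK_BUDGET_BPS = 1_000_000_000  # assumed 1 Gbit/s automotive Ethernet link


def raw_radar_data_rate_bps(channels, samples_per_chirp, chirps_per_frame,
                            frames_per_second, bits_per_sample):
    """Raw ADC data rate in bits per second, before any compression."""
    return (channels * samples_per_chirp * chirps_per_frame
            * frames_per_second * bits_per_sample)


def fits_on_link(rate_bps, budget_bps=LINK_BUDGET_BPS):
    """True if the raw stream fits within the assumed link budget."""
    return rate_bps <= budget_bps


# Hypothetical waveform shared by both radars.
waveform = dict(samples_per_chirp=256, chirps_per_frame=128,
                frames_per_second=20, bits_per_sample=32)

dense = raw_radar_data_rate_bps(channels=192, **waveform)   # many antenna channels
sparse = raw_radar_data_rate_bps(channels=12, **waveform)   # fewer channels

for name, rate in (("dense", dense), ("sparse", sparse)):
    print(f"{name}: {rate / 1e9:.2f} Gbit/s, fits on link: {fits_on_link(rate)}")
```

Under these assumptions the raw rate scales linearly with the number of channels, so a radar that reaches its resolution with fewer channels produces a stream small enough to send to the central processor.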

Newer iterations of millimeter-wave radar can help reduce costs and dramatically improve the performance of autonomous driving systems. When mass-produced, traditional low-cost radars can cost less than $50 each, an order of magnitude below the target cost of lidar. Combined with ubiquitous low-cost camera sensors, AI radar provides acceptable accuracy, which is critical for mass production of commercial autonomous vehicles. Lidar overlaps in function with a camera and millimeter-wave radar fusion system running AI algorithms. If the cost of lidar gradually decreases, it can serve as a safety redundancy for the camera plus millimeter-wave radar combination in L4/L5 autonomous driving systems.

Algorithm-first central processing architecture deepens sensor fusion to optimize autonomous driving system performance

Current object-level sensor fusion has certain limitations. Each front-end sensor carries its own local processor, which constrains the size, power consumption, and resource allocation of each smart sensor and, in turn, the performance of the entire autonomous driving system. Additionally, massive data processing can quickly deplete a vehicle's power and reduce its driving range.

Instead, an algorithm-first central processing architecture enables what we call deep, centralized pre-sensor fusion. This architecture leverages the most advanced semiconductor process nodes to optimize the performance of autonomous driving systems. It does so primarily by dynamically distributing processing power across all sensors and by adjusting the performance of different sensors and data movement to the driving scenario. By acquiring high-quality, low-level raw data, the central processing unit can make smarter and more accurate driving decisions.
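One way to picture dynamic distribution of processing power is as a shared compute budget that is re-divided whenever the driving scenario changes. The sketch below is purely hypothetical: the scenario names, sensor names, weights, and the abstract "compute units" are invented for illustration and do not describe how any particular chip schedules its workloads.

```python
# Hypothetical sketch: scenarios, sensors, and weights are invented for illustration.

SCENARIO_WEIGHTS = {
    "highway": {"front_radar": 4, "front_camera": 3, "side_camera": 1},
    "parking": {"front_radar": 1, "front_camera": 2, "side_camera": 5},
}


def allocate_compute(total_units, scenario):
    """Split a shared compute budget across sensors in proportion to scenario weights."""
    weights = SCENARIO_WEIGHTS[scenario]
    total_weight = sum(weights.values())
    return {sensor: total_units * weight / total_weight
            for sensor, weight in weights.items()}


highway = allocate_compute(80, "highway")
parking = allocate_compute(80, "parking")

print("highway:", highway)
print("parking:", parking)
```

With per-sensor processors, each sensor's share is fixed in hardware. With a central processor, the same budget can shift toward long-range forward sensing at speed and toward side coverage when maneuvering slowly.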

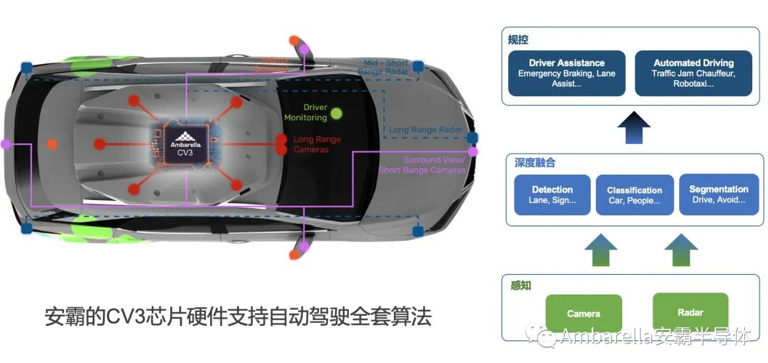

Autonomous vehicle manufacturers can combine low-power millimeter-wave radar and camera sensors with cutting-edge, algorithm-first application-specific processors, such as Ambarella's recently announced 5nm CV3 AI domain controller chip. This combination aims for the best perception and path planning performance at the highest energy efficiency, reducing battery consumption and significantly increasing the driving range of each autonomous vehicle.

Don't Ditch the Sensors - Invest in Their Fusion

Autonomous driving systems require diverse data to make the right driving decisions, and only deep, centralized sensor fusion can provide the extensive data needed for optimal autonomous driving system performance and safety. In our ideal model...

1. Low-power, AI-enhanced mmWave radar and camera sensors are locally connected to embedded processors on the periphery of autonomous vehicles.

2. The embedded processor sends the raw detection-level object data to the central domain SoC.

3. The central domain processor uses AI to analyze the combined data, identify objects, and make driving decisions.

Centralized pre-sensor fusion can improve on existing high-level fusion architectures, making autonomous vehicles that use sensor fusion more robust and reliable. To reap these benefits, self-driving car makers must invest in algorithm-first central processing units, as well as AI-enabled millimeter-wave radar and camera sensors. Through these combined efforts, manufacturers can usher in the next stage of technological change in the development of autonomous vehicles.